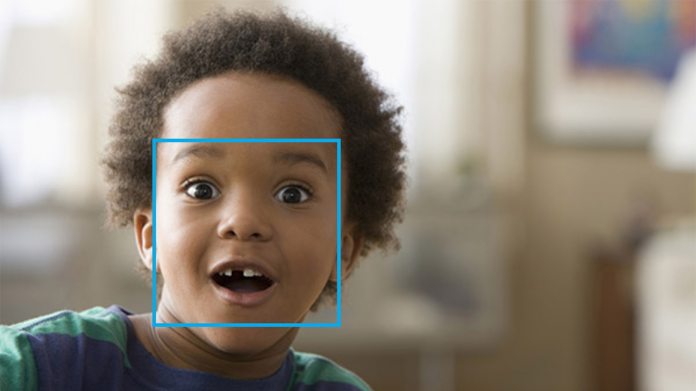

“The higher error rates on females with darker skin highlights an industrywide challenge: Artificial intelligence technologies are only as good as the data used to train them,” explained a spokesperson. “If a facial recognition system is to perform well across all people, the training dataset needs to represent a diversity of skin tones as well as factors such as hairstyle, jewelry and eyewear.” When analyzing the software, engineers found that it performed best on men with lighter skin and worst on women with darker skin. Since the update, the error rate for people with darker skin has been reduced twentyfold, and the error rate for women overall has been reduced ninefold.

ICE Controversy

To achieve these improvements, the Face API team revised its training and benchmark datasets, collected new data, and improved its classifier to achieve higher precision. “We had conversations about different ways to detect bias and operationalize fairness. We talked about data collection efforts to diversify the training data. We talked about different strategies to internally test our systems before we deploy them,” said Hanna Wallach, a senior researcher in Microsoft’s New York research lab.

However, Microsoft is under fire for assisting U.S. Immigration and Customs Enforcement (ICE) with facial recognition, something the company has itself acknowledged, so the controversy is unlikely to die down any time soon. Bias in AI is a serious problem, but there has been little discussion of who should have access to these tools in the first place. As Wallach said, “This is an opportunity to really think about what values we are reflecting in our systems, and whether they are the values we want to be reflecting in our systems.” Whatever your view, Microsoft is making the improved Face API available to all of its customers through Azure Cognitive Services.